Sometimes a continuous integration/delivery scenario is more complex than just building a system in a multi-stage pipeline. The system may consist of several subsystems, or just complex components, each of which requires a build pipeline of its own. Once all subsystems have passed through their respective build pipelines, they are integrated and subjected to a joint deployment and further testing. When facing such a scenario, I decided to build the simplest possible thing that would work and get the job done.

Basically the problem is this: a system may be constructed by several teams or just consist of several smaller systems or components – things that need compiling, testing, deploying, and some more testing. If all these stages succeed, there’ll be an artifact that constitutes a good version of the subsystem. Now, if several such artifacts exist, they need to be tested together. This testing may require deploying to a staging environment and a suite of automated end-to-end tests, manual testing, or both. The problem is that productive teams will push through numerous versions of working artifacts every day, and that the process that runs all of them together has to keep up.

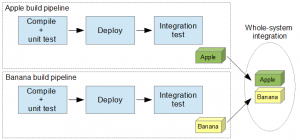

Let’s take a look at two teams working on systems Apple and Banana. Once they’re successfully built, they need to be integrated and tested together. If these tests pass, the system (fruit salad?) is deemed suitable for some exploratory testing or deployment.

How can the “Whole-system integration” process know when to pull in new versions of Apple and Banana, and how does it ensure that their artifacts are intact?

This is a well-known and solved problem. The solution is to store the artifacts produced by the pipelines in some central location and then have another process pick them up. This central location may be a local Maven or NuGet repository, a VCS repository, or just a directory. Whichever is chosen, what matters is that it only ever exposes complete binaries: the consuming process must never pick up half a binary just because another process is still writing it.
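If the central location is a plain directory, one common way to guarantee that readers only see complete binaries is to write to a temporary file first and rename it into place afterwards. Here is a minimal sketch in Python, which is my choice for illustration and not part of the tutorial below. The function name `publish_atomically` and the `.partial` suffix are made up, and the atomicity of the rename is assumed to hold because both files live in the same directory.

```python
import os
import shutil
import tempfile
from pathlib import Path


def publish_atomically(source: Path, repo_dir: Path) -> Path:
    """Copy `source` into `repo_dir` without ever exposing a partial file."""
    repo_dir.mkdir(parents=True, exist_ok=True)
    target = repo_dir / source.name
    # The temporary file is created in the target directory so that the
    # final rename stays on one filesystem.
    fd, tmp_name = tempfile.mkstemp(dir=repo_dir, suffix=".partial")
    try:
        with os.fdopen(fd, "wb") as tmp, source.open("rb") as src:
            shutil.copyfileobj(src, tmp)
            tmp.flush()
            os.fsync(tmp.fileno())
        # Readers see either the old artifact or the new one, never a mix.
        os.replace(tmp_name, target)
    except BaseException:
        # Leave no half-written file behind if anything went wrong.
        if os.path.exists(tmp_name):
            os.unlink(tmp_name)
        raise
    return target
```

A consumer would then simply ignore anything ending in `.partial`. A Maven, NuGet, or VCS repository gives a similar guarantee out of the box, which is one reason to prefer it over a bare directory.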

What’s good about having some kind of repository in between the build pipelines is that it can store multiple versions of the artifacts. So, if build x runs successfully with artifacts Apple_134 and Banana_53, and build x + 1 fails for Apple_134 and Banana_54, then we know where to look and what artifacts to compare.
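To make the "where to look" part concrete, here is a small Python sketch that compares the artifact versions used by two integration builds. How the versions are recorded is an assumption on my part (a plain mapping from artifact name to version number), and `changed_artifacts` is a hypothetical helper, not something Jenkins provides.

```python
from __future__ import annotations


def changed_artifacts(good: dict[str, int], bad: dict[str, int]) -> dict[str, tuple]:
    """Return the artifacts whose version differs between two builds."""
    names = sorted(set(good) | set(bad))
    return {
        name: (good.get(name), bad.get(name))
        for name in names
        if good.get(name) != bad.get(name)
    }


build_x = {"Apple": 134, "Banana": 53}         # integration passed
build_x_plus_1 = {"Apple": 134, "Banana": 54}  # integration failed
print(changed_artifacts(build_x, build_x_plus_1))  # {'Banana': (53, 54)}
```

Only Banana changed between the two builds, so Banana_53 and Banana_54 are the artifacts to compare.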

The second issue is that of tracking that location, be it a repository of some kind or a directory. Whenever its contents are updated a new integration build should be triggered.
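For a plain directory, change tracking can be as simple as remembering a fingerprint of its contents and comparing it on every poll. The Python sketch below illustrates the idea; it is not how Jenkins does it, and the names `fingerprint` and `should_trigger` are invented for this example.

```python
from __future__ import annotations

import hashlib
from pathlib import Path


def fingerprint(directory: Path) -> str:
    """Hash the relative names and contents of all files below `directory`."""
    digest = hashlib.sha256()
    for path in sorted(p for p in directory.rglob("*") if p.is_file()):
        digest.update(path.relative_to(directory).as_posix().encode("utf-8"))
        digest.update(b"\0")
        digest.update(path.read_bytes())
        digest.update(b"\0")
    return digest.hexdigest()


def should_trigger(directory: Path, last_seen: str | None) -> tuple[bool, str]:
    """Return (changed?, current fingerprint) for one polling round."""
    current = fingerprint(directory)
    return current != last_seen, current
```

A poller would call `should_trigger` at a fixed interval, start an integration build whenever the first value is true, and store the second value for the next round. With a VCS repository the commit id of the branch head plays the role of the fingerprint, which is what the tutorial below relies on.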

Now, wouldn’t just terminating all build pipelines in the integration job do the trick? No! First, it’s not fair to “punish” all the individual subsystems by having them go red whenever integration fails. Second, what would happen to the job scheduling if all jobs ended in a single bottleneck? I don’t know how the build server would do the scheduling, and I’m not keen on finding out. Third, in bigger systems it wouldn’t be unreasonable to have multiple build servers in different locations. Then all they would need to do would be to push the finished artifacts to a central repository.

The rest of the post is about implementing what I think is the simplest possible solution to these problems. It may not be the most robust and “enterprisy”, but it sure provides a starting point.

Let’s go!

* * *

This tutorial requires that Java (the JDK) and Git are installed. It’s executed on a Windows machine, but would work equally well in a Unix/Linux environment with minor modifications to paths and some commands.

Setting up the Git repository

For this, we’re using a bare Git repository:

mkdir c:\repo & cd c:\repo & git init --bare

Jenkins configuration

Create a home for Jenkins and remember to set the JENKINS_HOME environment variable:

mkdir c:\jenkins & set JENKINS_HOME=c:\jenkins

Download the latest jenkins.war and save it in c:\jenkins.

Clone the repository. All jobs in Jenkins will be able to use it.

cd c:\jenkins & git clone c:\repo

Start Jenkins.

cd c:\jenkins & java -jar jenkins.war

Open http://localhost:8080 in the browser. Once in Jenkins, install the “GIT Plugin” (Manage Jenkins/Manage Plugins). The “GIT Client Plugin” will be installed automatically.

Job configuration

Create a new job (freestyle project) called Apple. In the job configuration, select “Execute Windows batch command” in the build section. Paste this into the text box:

echo Apple%RANDOM% > apple
jar -cf apple.jar apple
copy apple.jar %JENKINS_HOME%\repo
cd %JENKINS_HOME%\repo
git add apple.jar
git commit -m "Promoting Apple at %TIME%" apple.jar
git push origin master

This sequence of commands is supposed to emulate the last step of a build pipeline, where a good version of an artifact is staged. The created artifact contains a random value to be different for every run. Java’s jar utility is used, since the tutorial requires Java to run Jenkins, so it might as well require the JDK, which contains jar.exe.

Trigger the job and verify that it runs.

Create a job called Banana and repeat the steps (changing “apple” to “banana” everywhere).

Finally, create a third job called Integration. Select Git in the Source Code Management section. Set Repository URL to c:\repo and put “master” in the text box labeled Branches to build.

In the Build Triggers section, select Poll SCM and type * * * * * in the schedule box. Jenkins will warn about this, but you want it to poll every minute for this experiment.

In the Build section, again select “Execute Windows batch command” and enter the following:

jar -xf apple.jar
jar -xf banana.jar
type apple banana

Trigger that build and note the values for Apple and Banana (visible in the console). Now trigger either the Apple or the Banana job, wait for the Integration job to execute, and observe the results.
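Reading the values off the console works for an experiment, but an integration step would normally record them somewhere. Since a jar file is a ZIP archive, the same extraction can be sketched in a few lines of Python. This is only an illustration under the assumption that the workspace contains `apple.jar` and `banana.jar` as created by the jobs above; `read_entry` and `integration_report` are hypothetical names.

```python
from __future__ import annotations

import zipfile
from pathlib import Path


def read_entry(jar_path: Path, entry: str) -> str:
    """Return the text of one entry in a jar (ZIP) file, trimmed."""
    with zipfile.ZipFile(jar_path) as jar:
        # echo in a batch file leaves trailing whitespace and a line break.
        return jar.read(entry).decode("ascii").strip()


def integration_report(workspace: Path) -> dict[str, str]:
    """Collect the payloads of both artifacts checked out in `workspace`."""
    return {
        "apple": read_entry(workspace / "apple.jar", "apple"),
        "banana": read_entry(workspace / "banana.jar", "banana"),
    }
```

If either jar is missing or lacks the expected entry, the call raises an exception, which is a reasonable way for an integration step to fail early.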

Conclusion

This simple simulation can be improved in several ways, and I’m aware of that. Still, it illustrates how two artifacts can be promoted to an integration build in a controlled fashion.
