I gave a lightning talk at tonight’s Lean Tribe Gathering in Stockholm about A/B testing at King: how we develop games and features, and how we decide which improvements to make. Here are my slides and notes from the presentation.

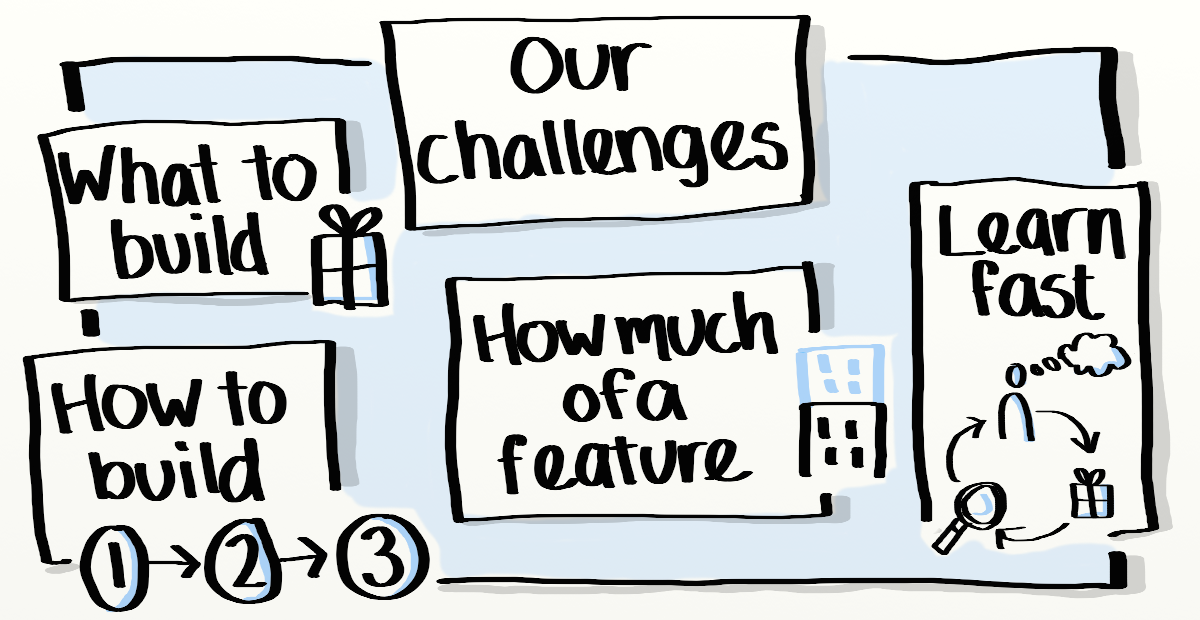

Building games at King we face challenges similar to most organizations:

- What should we build?

- How should we build it?

- How much of a feature do we need to build?

- How do we learn fast?

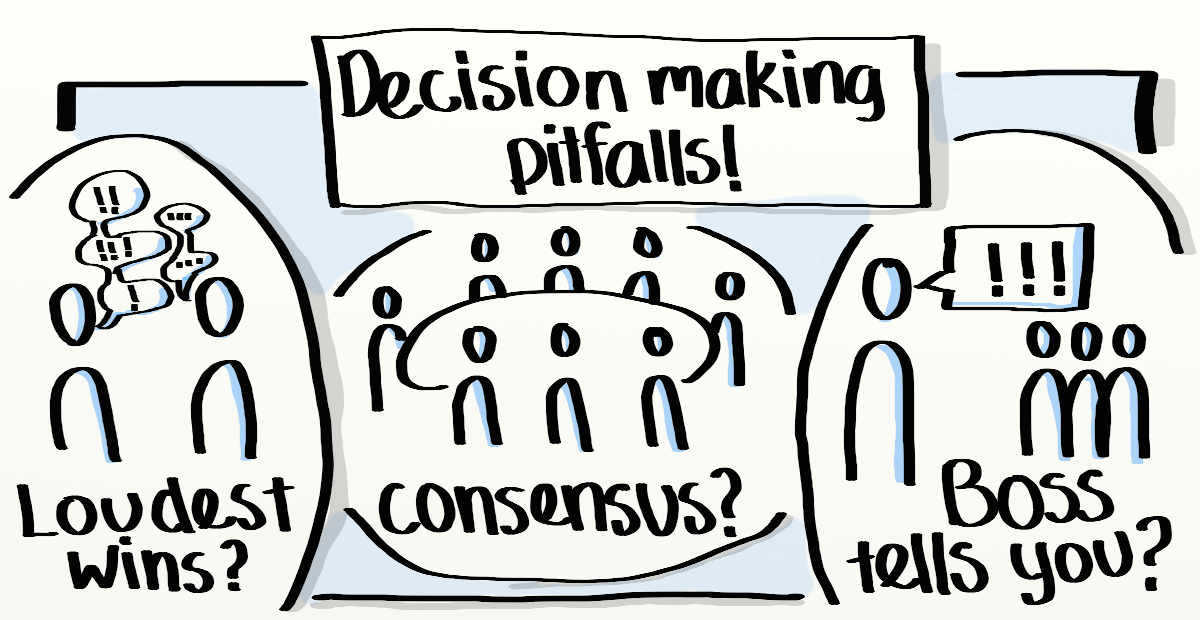

We want to avoid some common pitfalls of decision making:

- The loudest team member decides: loud doesn’t mean right.

- A boss tells you what to do: takes away team ownership and understanding.

- Consensus: this generally means that you either go with the lowest common denominator, or have gridlock.

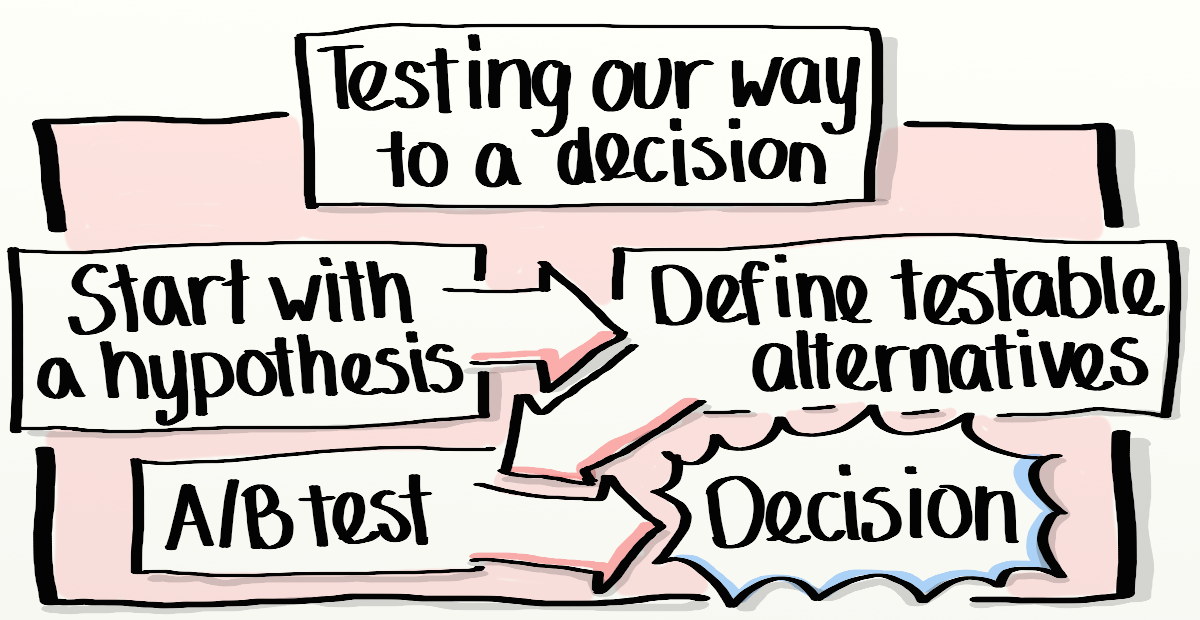

Before we start designing a feature, we come up with a hypothesis: a statement about our product, our users, and the relationship between them. We also specify acceptance criteria.

Next we define different alternatives. What are the possible solutions that address our hypothesis?

We A/B test. We compare all the solutions side by side to figure out which ones perform best according to our acceptance criteria.

Finally we can make a decision. Either we’ve found a solution, or we’ve disproved our hypothesis, or we’ve had to modify our hypothesis.

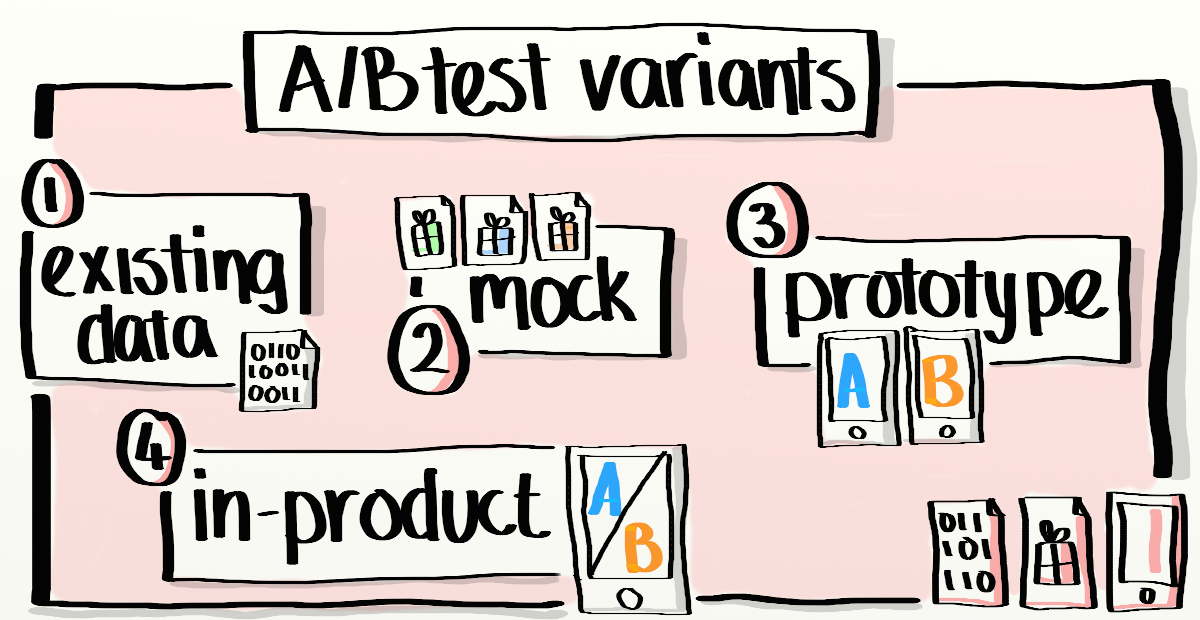

We interpret A/B testing in a very broad sense. Depending on the kind of hypothesis and the alternatives we want to test, we use one of four approaches:

- Existing data: If we have alternatives that have already been tested out in another game, and if those results are applicable to our case, then we use the existing data to decide which direction to take.

- Mock: Can we test the feature or idea separately from the rest of the game experience? In that case we might mock out the feature on paper, as a PowerPoint, or as a video, then test it on a handful of volunteers.

- Prototype: Maybe we need the rest of the game experience to verify a feature. In that case we prototype the different alternatives as separate code branches, deploy them on different devices, and find some test volunteers to try them out.

- In-product: This is the real deal, an actual A/B test. We implement the feature as a set of alternatives in the product, split the traffic by assigning each user to a specific alternative, then analyze the results. Sometimes we need to test a feature in a real-life situation to determine if it’s good, and our own users are the most representative test group we have.
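As an illustration of the traffic split in an in-product test, here is a minimal sketch in Python. It is not King's implementation; the function name `assign_variant`, the experiment name, and the hashing scheme are all assumptions made for this example. The idea it shows is that hashing the user id together with the experiment name gives each user a stable alternative without having to store the assignment anywhere.

```python
import hashlib


def assign_variant(user_id: str, experiment: str, variants: list[str]) -> str:
    """Deterministically map a user to one alternative of an experiment.

    Hypothetical helper for illustration only: the same user always gets
    the same alternative within an experiment, and including the experiment
    name in the hash makes splits in different experiments independent.
    """
    key = f"{experiment}:{user_id}".encode("utf-8")
    digest = hashlib.sha256(key).digest()
    # Use the first 8 bytes of the hash as an integer bucket number.
    bucket = int.from_bytes(digest[:8], "big") % len(variants)
    return variants[bucket]


if __name__ == "__main__":
    # Hypothetical experiment with three alternatives.
    alternatives = ["control", "alternative_a", "alternative_b"]
    for uid in ["user-1", "user-2", "user-3"]:
        print(uid, assign_variant(uid, "new-level-map", alternatives))
```

Because the assignment depends only on the user id and the experiment name, a returning user sees the same alternative on every visit, which keeps the test groups clean.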

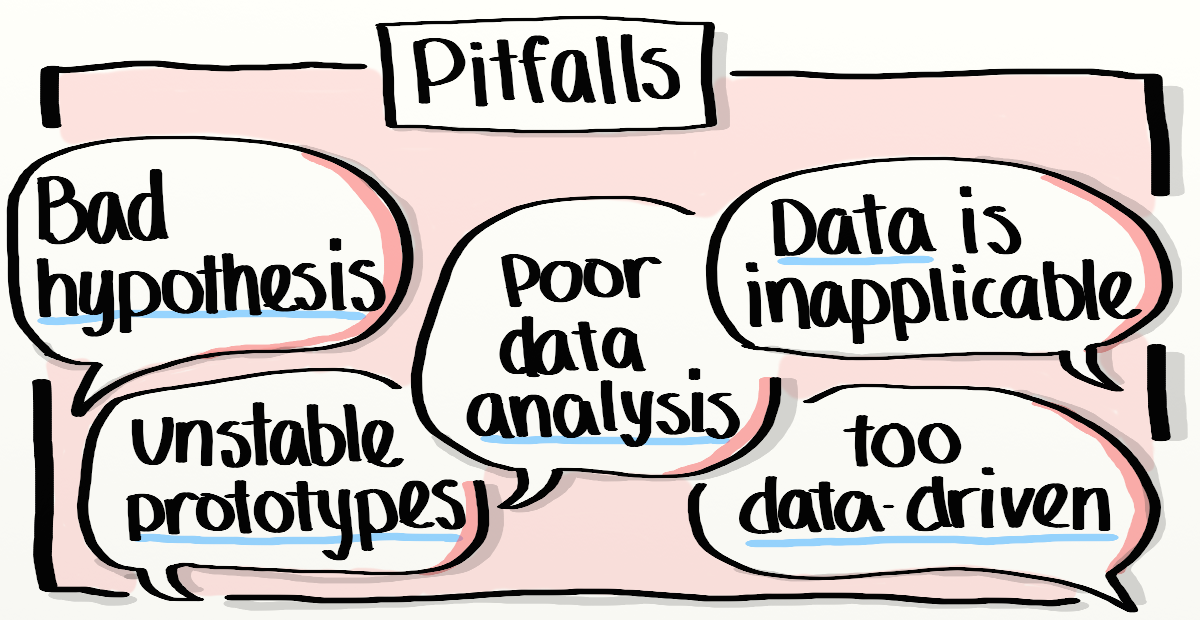

Watch out for:

- Bad hypothesis. If you make the wrong assumptions about your users’ needs, you might sub-optimize by fixing a little thing in one area when you have the most to gain by implementing a different solution altogether. If you’re listening closely, testing your way to a decision will highlight a bad hypothesis.

- Data is inapplicable. In order to verify your ideas against tests you’ve already done, the test conditions need to match your current product conditions. For example, your data might be based on tests from a desktop application while you’re working on a mobile application. Make sure that the test results correlate across the different contexts before using them to make decisions in yours.

- Poor data analysis. Numbers can be difficult to interpret. Are the test groups valid? Are there other changes being deployed at the same time that affect your test results? Are normal variations in traffic accounted for?

- Too data-driven. Do you determine all your upcoming features based on data you’ve gathered about your users’ behaviour? You might end up just making small incremental changes, when what you really need to do is overhaul major parts of your product. Use data to help you analyze test results, but carefully consider when it should drive your opportunity/development backlog.
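To make the "poor data analysis" pitfall a bit more concrete, here is a small sketch of one common way to check whether a difference between two test groups is larger than normal variation: a two-proportion z-test. This is a textbook technique chosen for illustration, not necessarily what we use at King, and the function name and the numbers in the example are made up. It also does not cover the other questions above, such as invalid test groups or other changes deployed at the same time.

```python
import math


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int):
    """Compare conversion rates of two groups (illustrative sketch).

    conv_* is the number of users who met the acceptance criterion,
    n_* is the total number of users in that group.
    Returns the z statistic and a two-sided p-value.
    """
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Pooled rate under the assumption that both groups behave the same.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


if __name__ == "__main__":
    # Made-up numbers: 10,000 users per group.
    z, p = two_proportion_z_test(conv_a=1000, n_a=10000, conv_b=1080, n_b=10000)
    print(f"z = {z:.2f}, p = {p:.3f}")
```

A small p-value suggests the difference is unlikely to be noise alone, but it says nothing about whether the groups were set up correctly in the first place.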

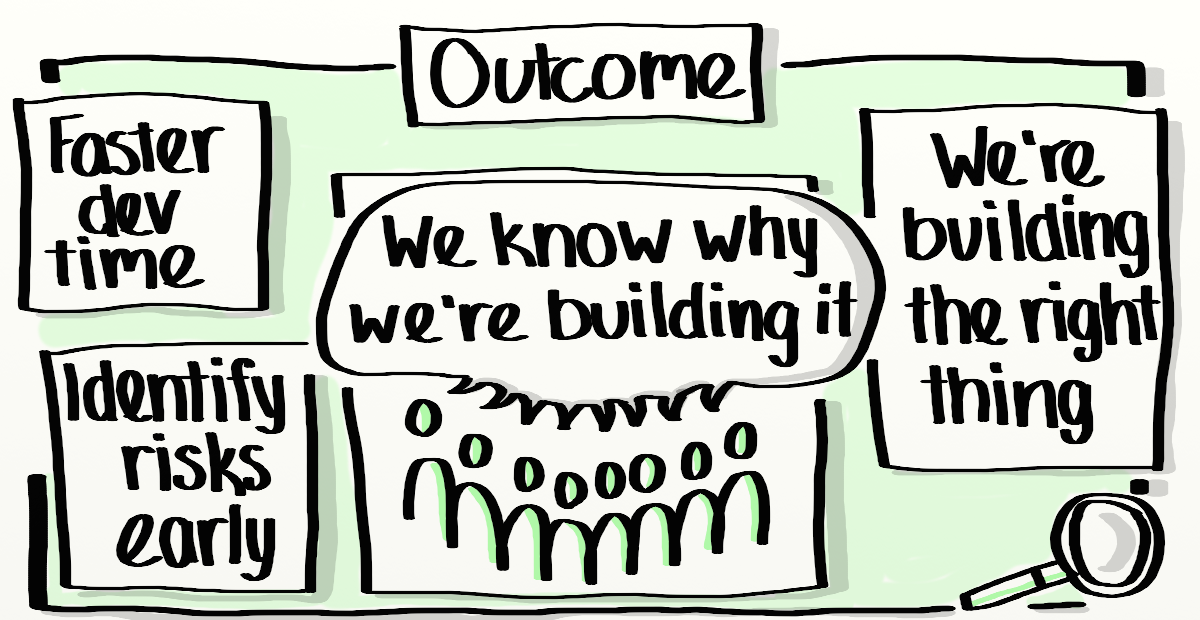

Our teams have experienced the following outcomes from using this method of development:

- The whole team knows why we’re building a feature. This doesn’t directly impact the user at first sight, but when a team understands the product and the user, they build better features.

- We’re building the right thing. If the users are happy, we’re happy 🙂

- Faster development time. We go faster because we build incrementally, and we can stop when a feature is good enough. We also waste less time on features that fail.

- Identify risks early. We’re continuously monitoring how our features perform, even once they’ve been successfully deployed, so we quickly notice downward trends or problems and address them. Since we also test throughout the development cycle, we can identify issues with planned features and act accordingly.

Previous posts about how we work at King:

Fostering collaboration with a delegation board

What should we build next?

How we developed Candy Crush Soda Saga

Get in touch via my homepage if you have questions or comments!